-

Products

Switches

Industrial Switches

4-TX + 1-FX 10/100/1000M IES 8*RJ-45 / 2*SFP IES 24*RJ-45 / 4*SFP+ Managed IES 48*RJ-45 / 6*SFP+ Managed IES 1-TX + 1-FX 10/100/1000M IES 2-TX + 1-FX 10/100/1000M IES 2-TX + 2-FX 10/100/1000M IES 4-TX + 2-FX 10/100/1000M IES 5TX 10/100/1000M IES 8TX 10/100/1000M IES 8-TX + 4-FX 10/100/1000M IES 16-TX + 2-FX 10/100/1000M IES 1-TX + 1-FX 10/100M IES

Media Converters

Copper to Fiber Gigabit Meida Converter 1-port RS-232/485/422 Serial Device Server 10/100M 1FX+ 2TX fiber media converter 10/100/1000BASE-TX TO 1000BASE-FX 9K LFP industrial Media Converter Small Type 10/100M Industrial Media Converter Small Type 10/100/1000M Industrial Media Converter 10G Copper to 10GBASE-X SFP+ Media Converter 10G SFP+ to SFP+ Fiber to Fiber Media Converter 10 / 100base-tx to 100Base-FX media converter 14-SLOT MEDIA CONVERTER CHASSIS 16-SLOT MEDIA CONVERTER CHASSIS MANAGED MEDIA CONVERTER(Internal Power Supply) MANAGED MEDIA CONVERTER( External Power Supply) -

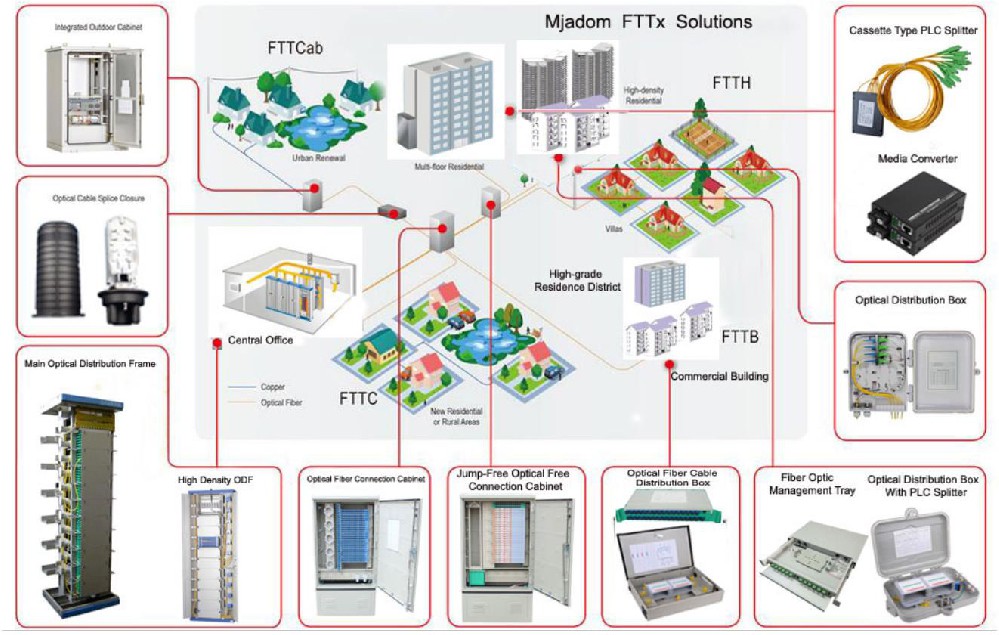

Solutions

Data CenterTelecom

- Insights

- About Us

- Contact Us

- Online Store

FAQ about AI Networks (InfiniBand)

2023-11-24

1. What is the role of network infrastructure in AI large model training scenarios?

For AI large-model training, multiple GPUs across multiple nodes must work together. The industry consensus is that NVLink combined with an InfiniBand (IB) network is the best choice for GPU-to-GPU communication in large-model training. In the current DGX H800 reference architecture, NVLink provides high-speed GPU communication within a node, while the IB network connects GPUs across nodes. Both are needed to build a complete data path for the entire training cluster. For AI infrastructure, therefore, a high-performance network is a prerequisite for a high-performance GPU cluster.

[Reference: NVIDIA DGX SuperPOD Reference Architecture for DGX H100](https://docs.nvidia.com/https:/docs.nvidia.com/dgx-superpod-reference-architecture-dgx-h100.pdf)

2. What are the differences between AI data centers and traditional data centers?

From a workload perspective, AI training is tightly coupled and relies mainly on collective communication. In collective operations, a bottleneck anywhere in the network slows down the whole job, and a failure at any single point can cause the job to fail. For this reason, we recommend a non-blocking network architecture with a 1:1 ratio of network cards to GPUs.

In terms of traffic, heavy collective communication consumes a great deal of bandwidth, and system I/O demands are high, similar to those seen in HPC. The network therefore needs high-bandwidth access and low end-to-end latency.

These factors explain the main differences between AI and traditional data center networks. Traditional cloud data centers use lower-bandwidth server access (25G) with moderate oversubscription (convergence) ratios at each network layer, sized to business throughput; they are relatively insensitive to latency and mostly use general-purpose TCP communication. AI data centers, by contrast, give compute nodes high-bandwidth access (200G/400G) over a non-blocking network, have high, latency-sensitive I/O requirements, and use the more efficient RDMA for communication, with GPUDirect RDMA for GPU-to-GPU transfers.
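To make "non-blocking" versus "convergence ratio" concrete, the sketch below computes a switch's oversubscription ratio as total downlink bandwidth divided by total uplink bandwidth, where 1.0 means non-blocking. The function name and both port layouts are hypothetical examples chosen for illustration, not vendor designs.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Downlink-to-uplink bandwidth ratio of one switch (1.0 means non-blocking)."""
    downlink = down_ports * down_gbps
    uplink = up_ports * up_gbps
    if uplink <= 0:
        raise ValueError("uplink bandwidth must be positive")
    return downlink / uplink


# Hypothetical AI leaf: 64 x 400G ports, half to servers, half to spines.
ai_leaf = oversubscription_ratio(32, 400, 32, 400)
# Hypothetical cloud leaf: 48 x 25G server ports, 8 x 100G uplinks.
cloud_leaf = oversubscription_ratio(48, 25, 8, 100)
print(f"AI leaf {ai_leaf:.1f}:1, cloud leaf {cloud_leaf:.1f}:1")
```

Any ratio above 1.0 means servers can offer more traffic than the uplinks carry, which is acceptable for loosely coupled cloud workloads but becomes a bottleneck for collective communication.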

3. How should IB network products be matched to the computing platform?

For A800, use 200G IB interfaces (HDR/NDR200); for H800, use 400G IB interfaces (NDR); for L40S, use 200G IB interfaces (HDR/NDR200).
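Combining these per-GPU speeds with the 1:1 NIC-to-GPU ratio recommended earlier gives each node's compute-network bandwidth. This is a minimal sketch: the dictionary and function are hypothetical, and the 8-GPU default is an assumption that should be changed for nodes with a different GPU count.

```python
# Per-GPU IB interface speed in Gb/s, as listed in this FAQ.
IB_GBPS_PER_GPU = {
    "A800": 200,  # HDR / NDR200
    "H800": 400,  # NDR
    "L40S": 200,  # HDR / NDR200
}


def node_network_gbps(gpu: str, gpus_per_node: int = 8) -> int:
    """Compute-network bandwidth of one node, assuming one NIC per GPU (1:1)."""
    return IB_GBPS_PER_GPU[gpu] * gpus_per_node


for model in IB_GBPS_PER_GPU:
    print(f"{model}: {node_network_gbps(model)} Gb/s per 8-GPU node")
```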

4. How to choose between IB and RoCE network solutions? What are their differences?

Traditional RoCE has limits when it comes to optimizing performance in large-scale networks. In typical commercial deployments, it can be tuned to perform well only in smaller networks. Building RoCE clusters with hundreds of network interfaces has required the strong R&D teams of large cloud companies working closely with equipment vendors. AI infrastructure networks are larger than traditional commercial RoCE solutions can handle; in some tests, network bandwidth utilization was only 40% to 50%.

NVIDIA Spectrum-X uses adaptive routing (AR) and next-generation congestion control to raise bandwidth utilization to as much as 95% and to resolve scaling issues, bringing cloud-provider-grade RoCE networking to commercial deployments.

InfiniBand has a long track record in networks with thousands of interfaces and needs no special configuration or tuning, which makes deployment and ongoing maintenance simple. Its network performance is better than that of general-purpose Ethernet solutions, and its in-network computing technology, SHARP, gives it a networking-performance advantage that Ethernet cannot replace.

5. Are there any recommended AI infrastructure solutions?

The DGX SuperPOD reference architecture provides the industry's most advanced GPU servers, complete and detailed network design references, and best practices for the full software stack. See the NVIDIA website for more information.

6. Which products make up the IB NDR generation?

The NDR generation includes Quantum-2 switches (QM9700, QM9790), ConnectX-7 network cards (400G NDR and 200G NDR200), NDR cables (DAC/ACC copper cables for links within 5 meters, plus optical modules and fibers), and the BlueField-3 DPU.

7. Are IB products compatible across generations?

Yes. IB products are backward compatible, mainly by running at a reduced speed. For example, the 400G NDR ports on an NDR switch can run at 200G to connect to HDR network cards (ConnectX-6 VPI 200G).
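Compatibility through speed reduction amounts to both ends running at the fastest rate they have in common. The sketch below illustrates only that rule; the supported-speed sets are illustrative assumptions rather than complete product speed lists, and real link training is performed by the hardware and firmware, not by code like this.

```python
def negotiated_gbps(port_a: set[int], port_b: set[int]) -> int:
    """Highest speed (Gb/s) that both link partners support."""
    common = port_a & port_b
    if not common:
        raise ValueError("no common speed; the link cannot come up")
    return max(common)


# Illustrative assumptions, not full product speed lists:
ndr_switch_port = {400, 200}   # NDR port that can also run at 200G
hdr_adapter = {200}            # ConnectX-6 VPI 200G (HDR)
print(negotiated_gbps(ndr_switch_port, hdr_adapter))  # 200
```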

8. Do IB switches support Ethernet?

Currently, no switch supports both IB and Ethernet. Under the IB standards, IP traffic (IPv4, IPv6) can be carried over an IB network by encapsulation, known as IP over InfiniBand (IPoIB).

9. Does CX-7 provide QSFP112 interface network card models?

The 400G ConnectX-7 network cards released so far use OSFP interfaces. 400G cards with QSFP112 interfaces are in development, but no release information has been announced. ConnectX-7 does offer 200G models with QSFP112 interfaces.

10. What's the difference between managed and unmanaged IB switches?

Each IB switch generation typically comes in two main models. For NDR, the QM9700 is a managed switch and the QM9790 is unmanaged. A managed switch runs a network operating system (NOS), much like a standard Ethernet switch: you can log in through its dedicated management port to configure and manage it, and it can optionally run the subnet manager. An unmanaged switch has no CPU and runs no NOS; it is configured remotely with tools such as mlxconfig.

11. Does the OpenSM subnet manager running on a switch or a host with an IB card provide the same management functions as UFM? What is UFM?

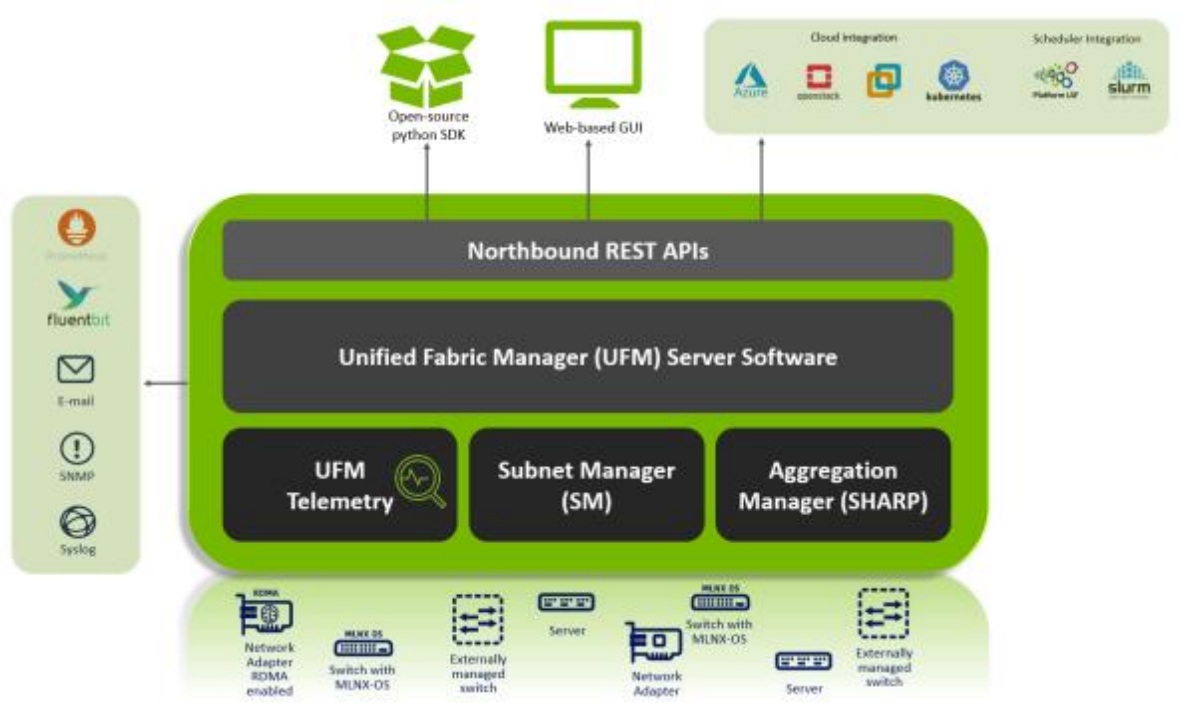

The subnet manager is the essential controller of an IB network, handling a wide range of functions such as network initialization, routing, and partitioning. It can run on managed IB switches or on Linux servers with IB cards and OFED drivers, and it is also one of the many components of UFM.

UFM is a comprehensive platform available in several editions: Telemetry, Enterprise, and Cyber-AI. UFM Enterprise, the most widely used edition, provides a web interface for managing, monitoring, and analyzing the entire network. The subnet manager is just one of its components.

UFM manages the entire IB network in-band and monitors it end to end, including HCAs (IB network cards), cables, and IB switches. The server running UFM must meet the requirements of the UFM software (UFM Telemetry/Enterprise) and have an IB card for the in-band connection.

12. How is the UFM service connected in the DGX H800 SuperPOD?

In the DGX H800 SuperPOD design, the number of DGX systems equals the number of SUs times 32, minus one. A single SU therefore holds 31 DGX systems: one DGX position is left unconnected, freeing a leaf switch port for the UFM server.
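The SU arithmetic can be written out as follows. This is a sketch of the formula stated above, assuming 32 DGX positions per SU and exactly one position reserved for the UFM server connection; the function name is hypothetical.

```python
DGX_POSITIONS_PER_SU = 32  # per the SuperPOD scheme described above


def dgx_count(num_sus: int) -> int:
    """Number of DGX systems for num_sus scalable units.

    One DGX position is left empty so its leaf switch port can connect the UFM server.
    """
    if num_sus < 1:
        raise ValueError("a SuperPOD needs at least one SU")
    return num_sus * DGX_POSITIONS_PER_SU - 1


for sus in (1, 2, 4):
    print(f"{sus} SU -> {dgx_count(sus)} DGX systems")
```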

13. Can the subnet manager of a managed switch display a graphical network topology?

Graphical management, including network topology views, is mainly provided by UFM (Enterprise and Cyber-AI). MLNX-OS on managed switches only offers a web interface for managing the local switch.

14. If NDR QM9700/QM9790 switches are specified with 64 400Gb ports, why do they only have 32 OSFP ports?

Because of size and power constraints, a 1U panel can hold only 32 OSFP cages. Each physical OSFP cage actually provides two independent 400G interfaces, known as twin-port 400G.
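The port count and raw capacity follow directly from the cage count. The sketch below only does that arithmetic; the total is a simple product of port count and line rate per direction, not a measured throughput figure.

```python
OSFP_CAGES = 32       # 1U front-panel limit
PORTS_PER_CAGE = 2    # twin-port 400G
PORT_GBPS = 400

logical_ports = OSFP_CAGES * PORTS_PER_CAGE
per_cage_gbps = PORTS_PER_CAGE * PORT_GBPS
total_tbps = logical_ports * PORT_GBPS / 1000  # one direction, raw line rate

print(f"{logical_ports} ports, {per_cage_gbps}G per cage, {total_tbps} Tb/s")
```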

15. Can UFM be used to monitor RoCE networks?

No, UFM only supports IB networks.

16. What are the differences among the various types of UFM?

UFM comes in three editions: Telemetry, Enterprise, and Cyber-AI.

- Telemetry is command-line only, with no graphical interface, and provides network-wide monitoring.

- Enterprise, the primary recommended edition, offers a web interface and network-wide configuration, monitoring, and analysis.

- Cyber-AI includes all Enterprise functions and adds AI-based analytics.

Enterprise and Telemetry can be purchased as software only and deployed on any server that meets the software requirements. NVIDIA also offers UFM Appliance servers with the software preinstalled.

Cyber-AI is an integrated solution that requires specific GPU-equipped servers to run its analytics and security features.

17. At what cluster scale is UFM recommended?

UFM is recommended for IB networks of any size. Beyond the subnet manager (OpenSM) functionality, it provides many powerful management and interface features, so it is worth deploying whenever there are network management requirements.

18. Is it possible to test UFM on a trial basis?

You can apply for a UFM Enterprise trial license through the website. If needed, contact us or our NVIDIA Partner Network (NPN) partners.

19. What are the networking recommendations for L40S?

Each L40S GPU requires 200G of network bandwidth, which can be provided by HDR or NDR200 IB, or by 200GbE Ethernet interfaces. Network scale depends on computing requirements. For larger networks, IB is recommended, with SHARP enabled for the best cluster performance. Smaller networks can use RoCE. (Once the Spectrum-X solution is officially released, the new generation of RoCE networks will support large-scale deployment.) Cloud user, tenant, and storage access traffic goes through the BlueField-3 (BF3) DPU.

20. What does 'Rail optimized' refer to?

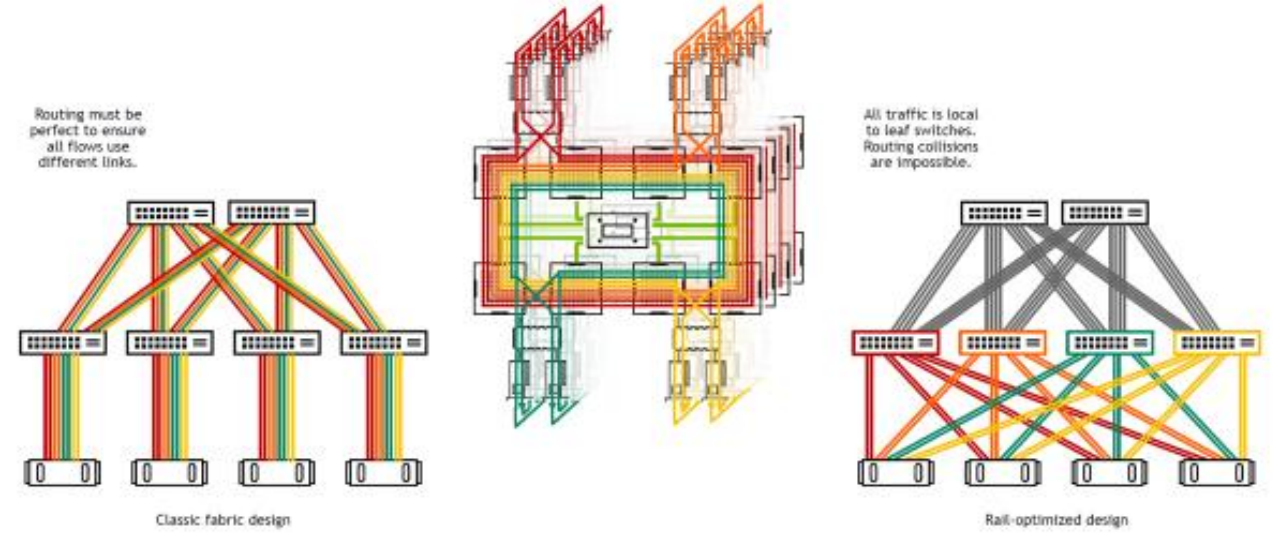

Rail optimized means arranging the physical network connections of GPU servers to match the logical topology that NCCL builds, so that every connection in the logical topology takes the shortest physical path.

From a cabling perspective, each of the 8 compute network interfaces of an 8-GPU server connects to a different one of eight leaf switches. The same-numbered interface on every GPU server (for example, port 1) forms a rail, and traffic within a rail takes the shortest physical path: a single hop through one leaf switch.

In practice, the NCCL logical topology is an overlay of multiple topologies, which lets the server use all of its compute network interfaces, with each physical interface carrying traffic within its own rail. The figure above compares traditional ToR networking (left), the NCCL logical topology (middle), and rail-optimized networking (right). Note that even with rail-optimized networking, inter-switch connections are still required for flexible resource scheduling, keeping the whole fabric fully and evenly connected.
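The rail wiring rule can be sketched as a pair of functions: NIC i of every server goes to leaf i, so traffic between same-numbered NICs stays on one leaf. This sketch assumes a single scalable unit with one leaf per rail and a two-tier leaf/spine fabric in which cross-rail traffic goes leaf, spine, leaf; it ignores NCCL's ability to move data between rails inside a node over NVLink. The function names are hypothetical.

```python
NUM_RAILS = 8  # one rail per compute NIC on an 8-GPU server


def leaf_for(server: int, nic: int) -> int:
    """Rail-optimized cabling: NIC i on every server connects to leaf switch i."""
    if not 0 <= nic < NUM_RAILS:
        raise ValueError("nic index out of range")
    return nic  # only the rail matters, not which server the NIC is in


def switch_hops(src: tuple[int, int], dst: tuple[int, int]) -> int:
    """Switches traversed between two (server, nic) endpoints in a leaf/spine fabric."""
    same_leaf = leaf_for(*src) == leaf_for(*dst)
    return 1 if same_leaf else 3  # one leaf, or leaf -> spine -> leaf


print(switch_hops((0, 3), (17, 3)))  # same rail: 1
print(switch_hops((0, 3), (17, 4)))  # different rails: 3
```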

21. For the HGX H800, each node has 8 GPUs. Does each node need 8 ports connected to the switch?

Yes. Following the rail-optimized design, each node needs 8 network ports (one per GPU) to get the full performance of the cluster.

22. What is the maximum transmission distance supported by IB cables without affecting transmission bandwidth and latency?

Multimode modules and fibers reach up to 50 m, FR4 single-mode modules up to 2 km, and the longest single-mode MPO cable we can supply is 150 m. DAC copper cables are limited to 3 m and ACC copper cables to 5 m.
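The reach limits above can be turned into a simple selection rule. The helper below is a hypothetical sketch that assumes you want the shortest-reach medium that still covers the distance; it models only the reaches quoted here and ignores the 150 m limit on prebuilt single-mode MPO cables, cooling, and connector type.

```python
# Maximum reach in meters as quoted above, shortest first.
REACH_M = [
    ("DAC copper cable", 3),
    ("ACC copper cable", 5),
    ("multimode module + fiber", 50),
    ("FR4 single-mode module + fiber", 2000),
]


def pick_link(distance_m: float) -> str:
    """Return the first (shortest-reach) option whose reach covers distance_m."""
    for option, reach in REACH_M:
        if distance_m <= reach:
            return option
    raise ValueError(f"{distance_m} m is beyond every reach listed")


for d in (2, 4, 30, 500):
    print(d, "m ->", pick_link(d))
```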

23. Can the CX7 network card in Ethernet mode interconnect with other manufacturers' 400G RDMA-supported Ethernet switches?

400G Ethernet interconnection is possible, and RDMA can run over it as RoCE, but performance is not guaranteed. For 400G Ethernet, we recommend the Spectrum-X platform, which combines BlueField-3 (BF3) with Spectrum-4 switches.

24. Why are NDR optical links built from separate modules and fibers, unlike HDR's integrated AOC cables?

NDR deployments mix single-mode and multimode optics, liquid- and air-cooled systems, and many different cable lengths. Covering every combination with integrated AOCs would be very complex and inflexible to deploy.

25. Is NDR compatible with HDR and EDR, and are the cables and modules for these connections only available in integrated form?

Yes. Connections between NDR and earlier generations currently have no separate module-and-fiber products; they mainly use integrated AOC cables.

26. Can the DGX H800 connect to HDR switches?

Yes, using a corresponding 400G one-to-two (splitter) flat-top AOC cable.

27. What are the differences between OSFP interface modules on the network card side and the switch side?

Network cards use flat-top modules; ConnectX-7 cards take 400G OSFP flat-top modules. Switches use twin-port 400G modules with a finned top, which are taller than flat-top modules. The compute network ports (host side) of the DGX H800 also use twin-port 400G modules, but in the flat-top version, which differs from the switch module by the -flt suffix in its part number.

28. Does an IB card support RDMA in Ethernet mode?

Yes. In Ethernet mode it supports RDMA over Ethernet, known as RoCE (RDMA over Converged Ethernet). For large-scale networks, NVIDIA's Spectrum-X solution is recommended.

29. Has BF3 gone into mass production?

Mass-production timing varies by OPN (ordering part number). Currently, the B3220 dual-port 200G model is in mass production.

30. Are the cables the same for 400G IB and 400G Ethernet, except for different optical modules?

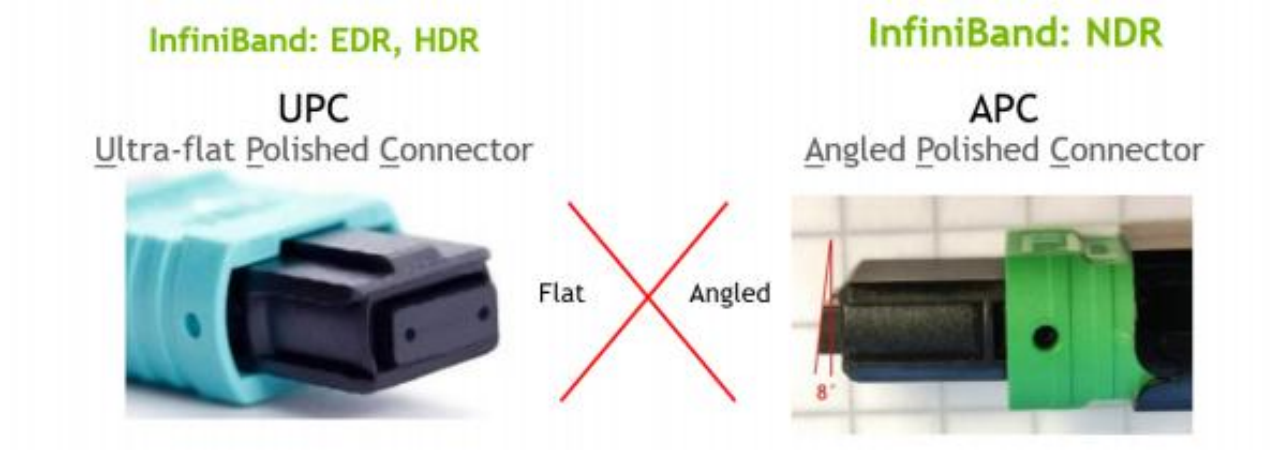

The fiber cables are the same. However, the optical interface of both OSFP and QSFP112 400G modules is MPO-12/APC, an angled physical contact connector with an 8-degree end-face angle, which differs from the MPO/UPC fiber commonly used for 100G.

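31. The OSFP network card side uses flat-top OSFP modules, right? Then why is it described as using an OSFP riding heatsink?

A riding heatsink is a heatsink mounted on the cage rather than on the module. Because the network card's OSFP cage carries the heatsink, the module plugged into it can be flat-top.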

32. The NVIDIA MMA4Z00-NS400 is described as an InfiniBand (IB) and Ethernet (ETH) 400Gb/s, single-port, OSFP, SR4 multimode parallel transceiver using a single 4-channel MPO-12/APC optical connector. Does this mean the module automatically works in Ethernet mode when the switch at the other end is Ethernet, without needing to change the module?

Yes, this is correct in scenarios where both sides are NVIDIA network products.

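33. Is PCIe Gen5 x16 bandwidth only 512G? What about PCIe Gen4?

For Gen5, an x16 link gives 32 GT/s × 16 lanes = 512 Gb/s; for Gen4, it is 16 GT/s × 16 lanes = 256 Gb/s. These are raw per-direction rates, before encoding and protocol overhead.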
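The arithmetic is easy to check, and it shows why a 400G network card needs a Gen5 x16 slot. The sketch below assumes the 128b/130b line encoding that PCIe Gen3 and later use; it still ignores packet-level protocol overhead, so real usable bandwidth is somewhat lower than the encoded figure. The function name is hypothetical.

```python
def pcie_gbps(gt_per_s: float, lanes: int = 16, encoded: bool = False) -> float:
    """PCIe bandwidth per direction in Gb/s.

    Raw = transfer rate x lanes. With encoded=True, apply the 128b/130b
    line code used by PCIe Gen3 and later (packet overhead is not modeled).
    """
    raw = gt_per_s * lanes
    return raw * 128 / 130 if encoded else raw


gen4, gen5 = pcie_gbps(16), pcie_gbps(32)
print(gen4, gen5)                             # 256 512
print(round(pcie_gbps(32, encoded=True), 1))  # 504.1
# Can an x16 slot carry a 400G NIC at line rate? Gen4: False, Gen5: True
print(400 <= pcie_gbps(16, encoded=True), 400 <= pcie_gbps(32, encoded=True))
```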

34. Do IB cards have a concept of simplex or duplex?

They are all full duplex. For current devices, the simplex/duplex distinction is only theoretical, because transmit and receive already use physically separate channels.

35. Is the largest 400G IB switch limited to 32 ports? What is the model?

The largest 400G IB switch is not limited to 32 ports. It has 32 cages but 64 ports. For the NDR generation, it's crucial to distinguish between the concepts of cage and port. The models are QM9700 and QM9790.

36. Are there networking issues with HGX systems from different suppliers?

With HGX systems from a single supplier, the topology numbering is consistent, so there are no issues. With multiple suppliers, however, inconsistent numbering can cause problems and must be unified, because NCCL requires uniform numbering on all nodes. Network card numbering follows the physical topology of the host bus, so it may not map one-to-one to the GPUs; adjust it so that NCCL can recognize the correspondence. The example below renames an RDMA device to help correct the order. The change does not persist across reboots, so add it to a system startup script. For example:

`/opt/mellanox/iproute2/sbin/rdma dev set mlx5_0 name mlx5_temp`
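Swapping two names with this command requires a temporary name, because a device cannot be renamed to a name that is already in use. The hypothetical Python helper below only generates the command strings for an arbitrary reordering in two passes (every device to a temporary name, then each temporary name to its target). It assumes the iproute2 `rdma` tool with the `rdma dev set DEV name NEWNAME` syntax, installed under /opt/mellanox/iproute2/sbin, and that no existing device name starts with `tmp_`. Review the output before adding it to a startup script.

```python
# Assumed location of the iproute2 `rdma` tool (taken from the FAQ's example path).
RDMA_BIN = "/opt/mellanox/iproute2/sbin/rdma"


def plan_renames(mapping: dict[str, str]) -> list[str]:
    """Build rename commands for {current_name: desired_name}.

    Pass 1 moves every renamed device to a temporary name (assumed unused),
    pass 2 moves each temporary name to its target, so targets never collide.
    """
    moves = [(old, new) for old, new in sorted(mapping.items()) if old != new]
    targets = [new for _, new in moves]
    if len(set(targets)) != len(targets):
        raise ValueError("two devices cannot be given the same name")
    pass1 = [f"{RDMA_BIN} dev set {old} name tmp_{old}" for old, _ in moves]
    pass2 = [f"{RDMA_BIN} dev set tmp_{old} name {new}" for old, new in moves]
    return pass1 + pass2


# Example: swap mlx5_0 and mlx5_1.
for cmd in plan_renames({"mlx5_0": "mlx5_1", "mlx5_1": "mlx5_0"}):
    print(cmd)
```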

37. What are the typical network card configurations for HGX, and what is the recommended approach?

HGX systems are complete servers built by our OEM partners; their network configuration can follow the DGX design.